━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 1 – Introduction to the module

What is a trend?

In the context of Visual Effects, a trend refers to a prevailing style, technique, or technology that is gaining momentum and being widely adopted in the industry over a particular period. It represents a shift in how visual effects are created, perceived, or applied in media production, often driven by new technologies, audience expectations, creative innovation, or advancements in hardware and software. Trends in VFX can span several aspects, including specific techniques (e.g., particle simulations, motion capture), aesthetics (e.g., photorealistic rendering), or even conceptual themes (e.g., deepfake technology or virtual humans).

How do we know when a trend is emerging?

- Widespread Adoption: When a specific technique or style begins to appear in multiple high-profile projects (films, TV shows, video games, etc.) over a short period, it’s a strong indication that it’s becoming a trend.

- Innovative Technology or Software: The introduction of new tools or platforms that enable previously impossible effects can lead to the rapid adoption of a new VFX technique. For example, the rise of real-time rendering with game engines like Unreal Engine has influenced both film and video game industries, allowing for more immersive and interactive visuals.

- Creative Shift: When artists, directors, or studios begin to experiment with a new visual language or artistic approach that resonates with audiences, it can lead to a wave of similar productions. The growing use of stylized, 3D-animated characters, for example, has been a trend in the animation industry.

- Audience and Critical Reception: Positive reactions from audiences and industry critics can accelerate the adoption of a specific visual technique, as it signals that the effect resonates well with viewers, which can set a new standard.

What are the current trends in VFX?

Real-Time Rendering

What it is:

Real-time rendering is the process of generating images fast enough to display them as they are computed (typically dozens of frames per second), which allows immediate feedback during production. Unlike traditional offline rendering, which can take hours or even days for a single frame, real-time rendering shows the visual output as soon as the computation happens. This shift is largely driven by advances in game engines such as Unreal Engine and Unity.

Key Applications:

- Film and TV production: Filmmakers can visualize environments and scenes live on set, integrating CGI elements and interacting with them in real time.

- Video games: Used for creating immersive, dynamic, and interactive worlds.

- Virtual events: Live streams, concerts, and sports events increasingly use real-time rendering for virtual effects.

Example:

- The Mandalorian (2019): The series used real-time rendering in Unreal Engine to display realistic environments on large LED screens during filming, rather than relying entirely on post-production CGI.

Virtual Production

What it is:

Virtual production combines digital tools with traditional filmmaking techniques, using real-time VFX to let live-action performances interact with virtual environments. Filming with virtual sets or environments on LED screens or green screens helps integrate the physical and digital worlds.

Key Applications:

- Filmmaking: Filmmakers can shoot scenes with virtual sets, landscapes, or backdrops in real-time, making the integration of CGI much smoother.

- TV Series: The ability to capture dynamic lighting, reflections, and interactions between live actors and virtual elements.

- Commercials and Music Videos: Cost-effective solutions for environments that would otherwise be difficult or impossible to create.

Example:

- The Mandalorian (2019): Used the Stagecraft system with large LED screens to project 3D environments around the actors, allowing real-time adjustments to lighting, reflections, and virtual environments, all while the scene was being filmed.

Why it’s a trend:

- Enhances actor performance and interaction with realistic digital environments.

- Reduces location shooting and set-building costs.

- Increases production speed with faster set-ups and less reliance on post-production.

Deepfake Technology and AI-Assisted VFX

What it is:

Deepfake technology uses artificial intelligence (AI) and machine learning to create hyper-realistic alterations to video and images. This technology can be used to swap faces, alter performances, or even de-age actors. It’s being used increasingly in film and advertising, as well as in digital media.

Key Applications:

- De-aging of actors: Recreating younger versions of actors (e.g., Robert De Niro in The Irishman).

- Digital recreation of actors: Bringing deceased actors back to life or continuing a character’s story after the actor is no longer available.

- Entertainment and advertising: Virtual influencers or marketing campaigns using AI-generated characters.

Example:

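- The Irishman (2019): Used digital de-aging so that the actors could portray younger versions of themselves with startling realism. Strictly speaking, this was CGI face work driven by the actors' captured performances rather than a deepfake, but it pursues the same goal of convincingly altering a face on screen.

- Rogue One: A Star Wars Story (2016): Used CGI faces, animated over the performances of stand-in actors, to digitally resurrect Peter Cushing as Grand Moff Tarkin and to recreate a young Carrie Fisher as Princess Leia.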

Why it’s a trend:

- It allows filmmakers to craft incredibly realistic digital doubles, making characters look younger or appear as different people.

- Offers an efficient and creative solution for certain storytelling needs.

- It has become more accessible due to AI advancements and improved VFX software.

Photorealistic CGI Characters and Creatures

What it is:

Photorealism in CGI refers to creating digital characters or creatures that look indistinguishable from real actors or animals. This trend is driven by advances in rendering technology, such as ray tracing, and by improved motion capture.

Key Applications:

- Live-action films: Characters or creatures that seamlessly blend into live-action scenes.

- Animated films: Creating highly detailed characters with photorealistic textures and movements.

- Video games: Enhancing player immersion with realistic NPCs (non-player characters) and environments.

Example:

- Avatar: The Way of Water (2022): Pushed the boundaries of realism in digital characters and creatures. The motion capture of actors, combined with photorealistic CGI, created some of the most lifelike computer-generated creatures and environments in film history.

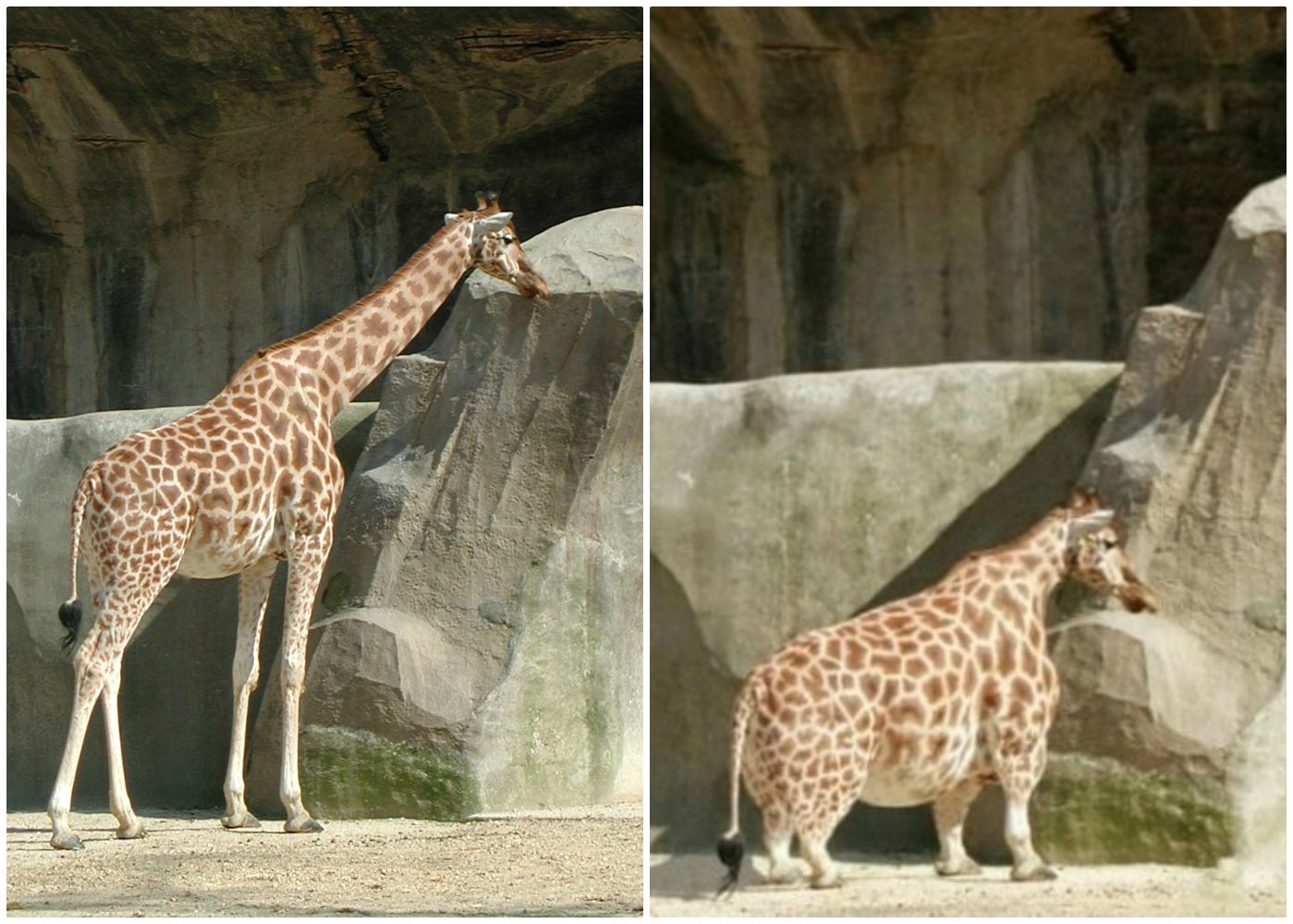

- The Lion King (2019): A hyper-realistic version of the beloved animated classic, featuring photorealistic CGI animals that looked almost indistinguishable from real wildlife.

Why it’s a trend:

- The increasing capability of rendering engines to produce photorealistic visuals.

- Creates more immersive experiences, especially in films and games.

- Demand for high-quality visual storytelling that doesn’t sacrifice detail or realism.

Motion Capture and Performance Capture Advancements

What it is:

Motion capture (mocap) technology records the movements of live actors and translates them into digital characters, while performance capture (perf-capture) goes further by also recording facial expressions, voice, and emotion. This technology has dramatically improved the realism and depth of CGI characters.

Key Applications:

- Film and TV: Capturing the movements and expressions of actors to animate digital characters.

- Video Games: Realistic character movements and emotions in games.

- Virtual Avatars: Used in VR and AR to create lifelike avatars for digital interaction.

Example:

- Avatar (2009) and Avatar: The Way of Water (2022): Both films used motion and performance capture to create the Na’vi characters, capturing every subtle nuance of the actors’ movements and facial expressions.

- The Last of Us Part II (2020): The game’s lifelike character animations were achieved through advanced motion and performance capture, which created an emotional depth rarely seen in video games.

Why it’s a trend:

- As capture technology becomes more accurate, it’s easier to create characters that respond naturally and expressively.

- The blending of performance and CGI enables more nuanced storytelling in both films and games.

- Advances in real-time mocap tools allow more immediate and seamless integration into productions.

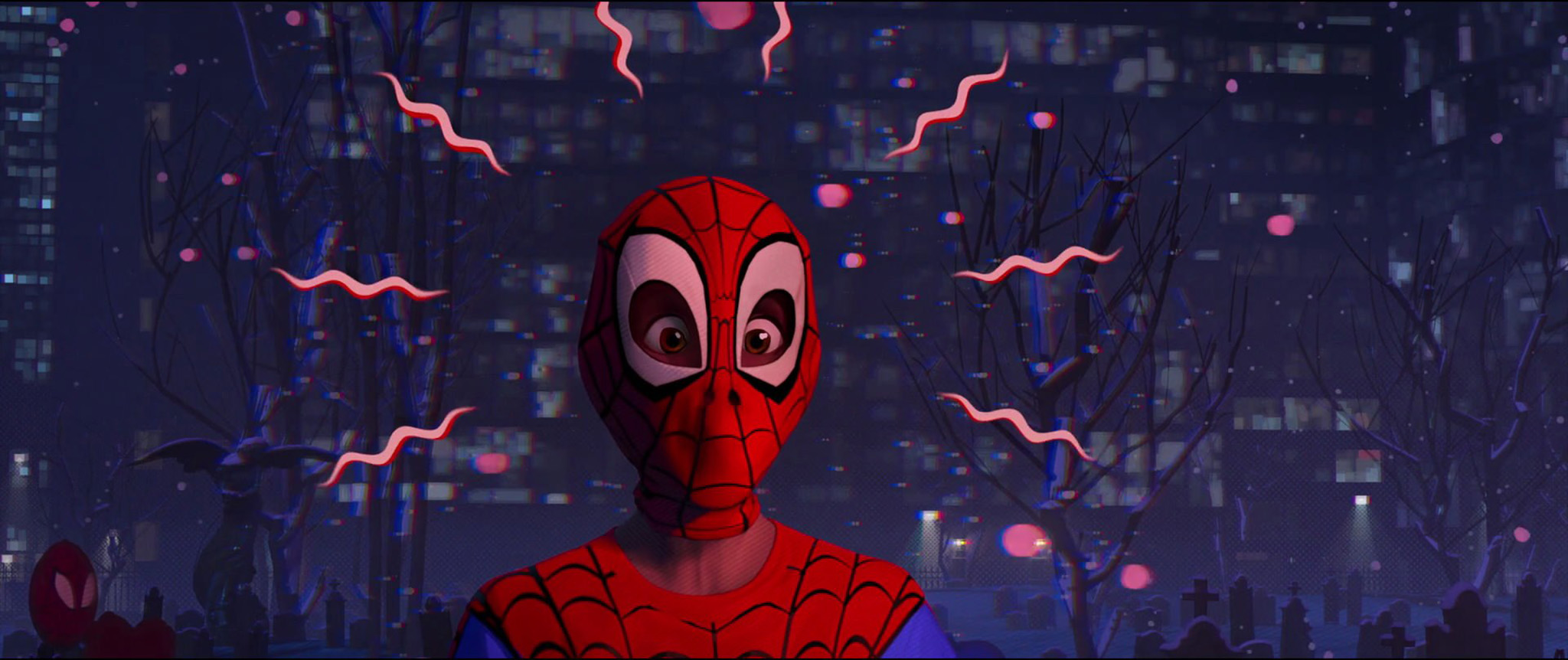

Hyper-Stylized Visuals in Animation

What it is:

A trend towards distinctive, artistic animation styles that depart from traditional CGI realism. These animations often combine 2D elements with 3D rendering, bold colors, and unconventional visuals to create a unique look.

Key Applications:

- Animated Films: Creative visual styles that depart from realism and explore new art forms.

- Commercials and Music Videos: Eye-catching and innovative styles that grab attention.

- Video Games: Games using distinct, artistic visual designs to set them apart from photorealistic games.

Example:

- Spider-Man: Into the Spider-Verse (2018): The film used a groundbreaking combination of 3D animation and 2D comic book-style visuals, creating a vibrant, unique aesthetic that pushed the boundaries of animated films.

- The Mitchells vs. the Machines (2021): Takes a similar approach, blending 3D animation with hand-drawn textures and expressive designs to create a visually engaging and original look.

Why it’s a trend:

- Filmmakers and animators are embracing more creative freedom, exploring new ways to tell stories visually.

- Audiences are seeking more distinctive, memorable visuals that stand out in a crowded media landscape.

- Advances in animation technology have made it easier to experiment with new techniques and looks.

Virtual Humans and Digital Doubles

What it is:

Virtual humans, or digital twins, are hyper-realistic, computer-generated replicas of real people or entirely fictional characters. They are often powered by AI and machine learning to mimic human behavior and speech in real time.

Key Applications:

- Advertising and Marketing: Virtual influencers like Lil Miquela have become popular in digital spaces.

- Video Games and VR: Creating lifelike avatars for interactive media experiences.

- Entertainment and Films: Digital actors or characters that are indistinguishable from humans.

Example:

- Lil Miquela: A virtual influencer who has a massive following on social media, creating a new form of digital celebrity.

- K-pop group aespa: The members of this group perform alongside digital avatars of themselves, creating a hybrid virtual/live performance.

Why it’s a trend:

- The increasing realism of CGI and AI-driven avatars offers new possibilities for marketing, entertainment, and digital engagement.

- Virtual humans allow for new forms of interaction, creating novel experiences for audiences and consumers.

The age of the image

Why do we photograph things?

Why are we addicted to images?

Edgerton, his work and his impact on visual effects:

Visual effects in films that could have been inspired by Edgerton’s work:

Gravity

https://youtu.be/FZfOvvGV5Q4?si=1gXh5a9rYgMNuAcI

Written Post 1 – What do you think Dr James Fox means by his phrase ‘The Age of the Image’?

In Dr. James Fox’s documentary Age of the Image: A New Reality, he introduces the concept of “The Age of the Image,” suggesting that modern-day society has become reliant on and addicted to images.

Fox points out that every historical era is defined by unique characteristics: the 18th century is recognized as the age of philosophy, and the 19th century is the age of the novel. In this context, he claims our current age is dominated by images.

While images have existed since ancient times, the past century has seen a massive increase in their quantity and accessibility due to advancements in photography. This shift has transformed how we communicate, express ourselves, and make sense of the world.

Fox emphasizes that we now document nearly every aspect of our lives through images, whether significant or not. In the past, photography preserved cherished memories, but with modern technology it has shifted toward validation and proof. The introduction of the Kodak Brownie camera in 1900 revolutionized photography, making it accessible to the masses and turning stiff portraits into snapshots of genuine emotions and everyday moments. Today, the ease of capturing images with smartphones has left individual photographs holding less meaning: instead of preserving frozen moments of the past, they often serve as evidence of experiences.

In conclusion, Dr. James Fox’s phrase “The Age of the Image” describes a transformative period where images have become central to our understanding of the world. Their accessibility and manipulation have reshaped how we capture, perceive, and share experiences. While images remain vital for expression, they also challenge our understanding of reality and meaning.

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 2 – The Photographic Truth Claim – Can we believe what we see?

The Allegory of the Cave

The Allegory of the Cave encapsulates several core aspects of Plato’s philosophy in a concise and accessible parable. It explores themes of knowledge, perception, and enlightenment, offering profound insights into human nature and the process of learning.

In the allegory, the prisoners represent common people—individuals who are confined to a limited understanding of the world. They are trapped in a cave, forced to face a wall where shadows are cast by objects behind them. These shadows symbolize the distorted reality that many people experience, as they only know what they can see and perceive, without a true understanding of the world around them. The cave, in this sense, represents the world we live in, filled with illusion and misperception, where individuals often rely on preconceived notions or surface-level experiences.

The prisoners’ ignorance is not necessarily their fault—it is their starting point. They do not know that there is more to reality because they have never seen beyond the shadows on the wall. This represents how people, by nature, are often limited by their own experiences, conditioning, and environment. Enlightenment, or true knowledge, requires breaking free from these limitations, but this process cannot be rushed or forced.

One of the central ideas of the allegory is that you cannot simply impose your point of view upon others. Instead, patience and empathy are key in guiding others toward greater understanding. The philosopher or enlightened individual, upon discovering the truth outside the cave, cannot force others to accept the truth. The allegory suggests that meaningful change comes through cooperative dialogue, where differing perspectives can be discussed openly and critically. By fostering a space for reflection and critical thinking, we encourage individuals to question their assumptions and explore deeper truths.

Ultimately, the allegory highlights the importance of intellectual growth, self-awareness, and the transformative power of knowledge, while also emphasizing the value of patience and dialogue in the pursuit of enlightenment. Through this method, we can stimulate critical thinking and open the path for others to move beyond the shadows of their own limited perceptions.

Plato’s Allegory of the Cave also offers profound insights into perception, reality, and enlightenment, themes that have been explored extensively in visual storytelling and VFX across various forms of media, from early cinema to modern video games and virtual reality (VR). While Plato’s allegory isn’t directly about visual effects, its core concepts have been skillfully represented in the way we perceive and interact with images in contemporary media.

Representation of Plato’s allegory in visual storytelling and VFX

In Plato’s allegory, the prisoners are shackled in a cave, only able to see shadows cast on a wall, believing these shadows to be their entire reality. This reflects how we, as viewers, perceive what is presented to us through visual storytelling—whether in film, television, or even video games. In this context, visual effects (VFX) play a key role in shaping these perceptions. Just as the prisoners mistake shadows for reality, audiences are often “tricked” into seeing VFX-generated imagery as real. The powerful illusion of VFX creates a constructed world where the audience accepts what they see on screen as reality, even though they know it is a fabricated representation.

Example:

Early cinema offers a famous example. L’Arrivée d’un Train en Gare de La Ciotat (1896) is often cited as one of the first cinematic illusions: according to a popular, probably exaggerated, story, viewers unfamiliar with cinema were shocked when they saw a train approaching the camera and believed it was about to crash into them. This is analogous to Plato’s cave: the viewers were seeing a representation of reality (the train) but mistook it for something they would experience in the physical world.

Similarly, animals watching television often react to moving images on a screen as if they were real objects, unable to tell the difference between reality and the projected image. This reflects the way the prisoners in the cave cannot distinguish between the shadows on the wall and the true world outside.

In modern contexts, such as virtual reality (VR) or video games, the allegory’s themes are even more pronounced. In VR, the user is immersed in an entirely different version of reality, which, although artificial, feels entirely real to the participant. Just as the prisoners in the cave are bound by their limited perceptions, VR offers an alternative reality, one that can be manipulated and shaped by designers. These immersive experiences trick the mind into believing that the virtual world is the true environment, reflecting Plato’s notion of the world being a mere representation of deeper truths.

How does VFX relate to Plato’s allegory?

Can you think of any examples of where this is happening?

Where are images becoming more like reality, and reality becoming more like images?

- Realistic CG animals

3D creature artists use real-life photographs of animals as reference and then bring the creatures to life digitally, so that audiences believe the virtual animals are real.

it takes a loop

Recreating historic events or places through the use of vfx

Final result

Final result

Final result

Architectural visualisation

Social Media – manipulating images

removing tourists from pictures

Deepfakes

The Photographic Truth Claim

The index points towards an object that existed before the lens.

Photographs as Indexical signs

A photograph holds a trace, footprint or fingerprint of reality.

This is referred to as indexicality.

Photographic Index – Andre Bazin

The case for photography as an indexical medium was advanced by André Bazin in his famous essay “The Ontology of the Photographic Image” (1960).

Whilst Bazin did not use the term index, he asserted that the invention of photography was the most important event in the history of the plastic arts.

Semiotics

- Icon – a sign that resembles or imitates what it represents. For instance, a photograph of a tree is an icon of a tree because it visually resembles it.

- Index – refers to a sign that has a direct, causal connection to what it represents. For example, smoke is an index of fire; the presence of smoke indicates that there is fire.

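- Symbol – a sign whose connection to what it represents is purely conventional and has to be learned. For example, the word “tree” neither resembles a tree nor is caused by one.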

Analysing the impact of VFX on Photographic Truth

In this activity, please search for VFX heavy images and analyze how visual effects challenge the photographic truth claim (in the images) using semiotic concepts.

Analyze an image

- Indexicality – Are there any traces of the real world, or is it fully fabricated?

- Iconicity – Does it resemble reality convincingly, or is it fully fictional?

- Photographic truth – Does it claim to represent reality, and how does this challenge traditional truth claims?

Jurassic World

- Indexicality – Yes: the environment and the human are real, photographed elements.

- Iconicity – The dinosaurs are fictional, yet they convincingly represent how real dinosaurs might have looked.

- Photographic truth – The scene is photorealistic, but it is fictional because of the added dinosaurs.

What do you think is meant by the Photographic Truth Claim?

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 3 – Faking photographs: Image manipulation, computer collage and the impression of reality

“One way or another, a photograph provides evidence about a scene, about the way things were, and most of us have a strong intuitive feeling that it provides better evidence than any other kind of picture.

We feel that the evidence it presents corresponds in some strong sense to reality, and (in accordance with the correspondence theory of truth) that it is true because it does so.” – Mitchell 1998, p.24

“Faced with an image on a screen, we no longer know if the image testifies to the existence of that which it depicts or if it simply constructs a world that has no independent existence.” – Cassetti 2011

The quotes from Mitchell (1998) and Cassetti (2011) highlight a profound shift in how we perceive images, especially in the context of photography and visual media. They touch upon the tension between the truth a photograph is believed to represent and the potential for images—whether photographs or computer-generated visuals—to create realities that may not exist in the physical world.

The Photographic Image as evidence of reality (Mitchell 1998)

In Mitchell’s work, he points out that photographs have long been viewed as reliable evidence of the world as it is, offering a direct connection to reality. People often feel that a photograph is a truthful representation of what was in front of the camera at the moment it was taken. This perception is rooted in the correspondence theory of truth, which asserts that something is true if it corresponds to the way things really are in the world.

For example, when we look at a photograph, we intuitively believe that it provides evidence about the scene it depicts—the way things were at a particular time and place. Photographs, in this sense, seem to offer a window into the past, capturing the “truth” of a moment, unmediated by the manipulation of the photographer (or at least, minimally manipulated). This belief is so ingrained in our visual culture that photographs have a sort of “authenticity” that other forms of imagery, such as paintings or drawings, do not possess to the same degree.

However, this view begins to unravel when we consider the manipulations and constructions behind the creation of any image, whether it’s a photograph or a digitally rendered visual in modern media.

The crisis of certainty in visual media (Cassetti 2011)

Cassetti’s quote introduces a more critical perspective. As technology advances, particularly with the rise of digital media, visual effects (VFX), and computer-generated imagery (CGI), we are faced with images that can no longer be easily trusted as representations of real, existing things. Today, an image might no longer testify to the existence of something in the world. Instead, it may be the product of a constructed world—one that has no real counterpart in the physical world at all.

This idea taps into the postmodern concern about the collapse of distinctions between reality and representation. With VFX and digital technologies, filmmakers and artists can create hyper-realistic worlds, characters, and objects that appear “real,” but are entirely fictional or digitally generated. The illusion of reality can be so convincing (e.g., in films like Avatar or The Matrix) that we start to question whether the images we are presented with correspond to anything outside of the screen.

For example, in VR environments, players might interact with digital objects or people that feel real in the moment but have no tangible existence in the physical world. The same is true for highly realistic computer-generated imagery in movies, where a character might look, move, and behave exactly like a real person, but be entirely made of pixels.

Cassetti’s point is that in such contexts, we face a crisis of certainty: Can we still trust the image to convey truth? Are these constructed worlds simply illusions, or do they represent new forms of reality? As technology progresses, the line between the real and the imaginary becomes increasingly blurred, and the “truth” that images present becomes more subjective, questioning whether an image really serves as a reliable testimony to the world or if it simply constructs a reality that we willingly accept.

The role of photography in the digital age

With the ubiquity of social media and digital photography, many people now have access to advanced editing tools that can manipulate images, further complicating our relationship with photographic truth. The public’s increasing awareness of deepfake technology and image manipulation adds to this uncertainty, as we become more skeptical of whether any image—whether a photograph or a video—accurately reflects reality.

In the digital age, images are often created, modified, and shared without direct reference to actual events. They may represent a constructed version of reality, a curated truth, or a fictional world altogether. This erosion of trust in images as evidence of reality challenges the long-held belief that photographs are an unmediated reflection of the world.

The philosophical implications

The tension between the belief that photographs are truthful and the understanding that images can construct alternative realities invites deeper philosophical questions about the nature of truth and representation. In a world where images are no longer guaranteed to represent reality, we are forced to question how we define truth itself.

- Is truth simply what we see?

Mitchell’s perspective aligns with the correspondence theory of truth, where the image corresponds to reality and is accepted as true because it reflects what actually existed. Yet, as technology advances, this model becomes more complicated.

- Or is truth constructed by the image?

Cassetti’s view suggests a more constructivist approach: that images, particularly digital ones, are not mere reflections of the world but active constructions that shape our perception of reality. If reality can be constructed so convincingly through VFX and digital media, does it change our perception of what is “real”?

Conclusion

Mitchell (1998) and Cassetti (2011) highlight a profound shift in how we understand and interact with images. The trust we once placed in photographs as truthful representations of reality has been undermined by the increasing sophistication of digital technologies that create images that may or may not correspond to the physical world. This shift challenges our traditional understanding of truth and representation and invites us to reconsider the role of images in shaping our understanding of reality.

In the context of modern visual storytelling, VFX, photography, and digital media play crucial roles in this transformation. The power of images to construct, manipulate, and question reality challenges the intuitive belief that what we see on screen corresponds to the truth. As technology continues to advance, we may have to rethink the very nature of “truth” and “reality” in the visual world.

Hoax Photography

The examples of hoax photography like the Cottingley Fairies and the Loch Ness Monster (Nessie) are powerful illustrations of how photographs and other images can be manipulated to present false or fantastical narratives, creating illusions that people often believe to be true. These cases show how easily visual representations can shape public belief, even when the images themselves are later debunked.

The Cottingley Fairies

The Cottingley Fairies are one of the most famous examples of photographic hoaxes in history. In 1917, two young cousins, Elsie Wright and Frances Griffiths, from Cottingley, England, took a series of photographs that appeared to show them interacting with fairies. The photos featured small, ethereal creatures seemingly flying or standing near the girls. The images were so convincing at the time that they garnered significant attention and were taken seriously by many, including prominent figures like Arthur Conan Doyle, the creator of Sherlock Holmes, who was a strong believer in spiritualism and the supernatural.

For many years, the photos were thought to be authentic, and the idea that the girls had photographed real fairies was widely accepted by some sections of the public. However, in 1983, the two women admitted that the fairies in the photos were paper cutouts, and the entire hoax had been orchestrated for fun. Despite their admission, the Cottingley Fairies hoax had a lasting cultural impact, demonstrating how photographs—whether manipulated or genuine—can influence perceptions of reality. The belief in the photographs’ authenticity was largely driven by their strong emotional impact and the trust placed in photographic evidence.

Key Point: The Cottingley Fairies case highlights how photographs can be misinterpreted as undeniable evidence of reality, even when the images themselves are fabricated. It also illustrates how, once an image is presented as truth, it can be difficult to convince the public otherwise, especially when the visual evidence aligns with pre-existing beliefs.

Cottingley Fairies

The Loch Ness Monster

The Loch Ness Monster, affectionately known as Nessie, is another well-known example of a photographic hoax that captivated the public’s imagination for decades. The legend of Nessie dates back centuries, but the most famous photograph—often referred to as the “Surgeon’s Photo”—was taken in 1934 by a man named Dr. Robert Kenneth Wilson, a London physician. The photograph allegedly showed a large creature emerging from the waters of Loch Ness in Scotland, with a long neck and humps in the water, resembling descriptions of the Loch Ness Monster.

For many years, the “Surgeon’s Photo” was considered one of the best pieces of evidence supporting the existence of Nessie. It appeared to provide photographic proof of the creature’s existence. However, in 1994, a man named Christian Spurling, who had been involved in the hoax, admitted that the photograph was a staged event. The photo was created using a toy submarine with a model of a creature attached to it. Spurling revealed that the photo had been intentionally manipulated to create the illusion of a large, mysterious creature in Loch Ness, feeding into the growing mythology around the Loch Ness Monster.

Key Point: The “Surgeon’s Photo” is a prime example of how an image can be crafted and manipulated to fuel a narrative, and how it can be widely accepted as evidence of something extraordinary, even when it is later revealed to be a hoax. The belief in the Loch Ness Monster continues to persist in popular culture, demonstrating how powerful visual representations can shape myth and legend.

Loch Ness Monster

Loch Ness Monster

Marilyn Monroe and Elizabeth Taylor

A 1945 photo of soldiers raising the Soviet flag over Berlin’s Reichstag building was staged and then doctored.

Digital Fakes

Traditional Matte Paintings

Traditional matte paintings are detailed, hand-painted backgrounds used in film and television to create the illusion of expansive environments. Typically painted on glass or canvas, these artworks are integrated with live-action footage to produce seamless visuals. While the technique was widely used in early cinema, it has largely been replaced by digital methods today, although many principles of traditional matte painting remain relevant in modern filmmaking. Iconic films like Star Wars and The Lord of the Rings showcase the enduring impact of this art form on visual storytelling.

- Painting on a large sheet of glass or canvas

- Leave gaps in specific positions

- Film the painting with the gaps blacked out

- Live action filmed separately and projected into those gaps

- Combining the matte painting and live action together seamlessly

Examples of traditional matte paintings:

- Lord of the Rings

- Titanic

- Star Wars

Blending reality & VFX seamlessly

The evolution of special effects in cinema

The development of photomontage techniques has been pivotal in the evolution of film and special effects. Notable contributions include:

- A Trip to the Moon (Le Voyage dans la Lune, 1902): One of the early examples of in-camera trick effects, with Georges Méliès using substitution splices and multiple exposures to push the boundaries of visual storytelling.

- Model Making: Artists began using physical models for special effects shots, creating visual illusions for cinematic worlds.

- Back Projection & Blue/Green Screen: Techniques that allow actors to perform in front of a screen, with the background either projected behind them during filming (back projection) or inserted later through compositing (blue/green screen), which paved the way for more immersive effects in film.

These innovations laid the groundwork for modern special effects, integrating traditional techniques with digital advances for unparalleled visual experiences.

Week 3 Written Post – Fakes or composites?

The Crown

The deer in the shot was created in 3D with attention to realistic fur grooming and detailed textures. It was then composited into a natural background, with the lighting carefully matched to blend the digital deer seamlessly into the environment.

The team at Framestore used depth of field to mimic how a real camera would capture the scene, blurring the background behind the deer. This effect, along with realistic lighting and shadows, made the digital model appear as if it were part of the live-action shot.
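The amount of background blur in a shot like this can be reasoned about with the thin-lens circle-of-confusion formula. The Python sketch below is an illustration under simplifying assumptions (an ideal thin lens, all distances in millimetres measured from the lens); the function name and the lens values in the example are hypothetical and are not taken from Framestore’s production.

```python
def circle_of_confusion(focal_mm, f_number, focus_dist_mm, subject_dist_mm):
    """Blur-disc diameter (mm, on the sensor) for a point at subject_dist_mm
    when an ideal thin lens of focal length focal_mm, set to f_number,
    is focused at focus_dist_mm. Distances are measured from the lens."""
    aperture_mm = focal_mm / f_number  # aperture diameter A = f / N
    return (aperture_mm
            * abs(subject_dist_mm - focus_dist_mm) / subject_dist_mm
            * focal_mm / (focus_dist_mm - focal_mm))

# Hypothetical setup: 50 mm lens at f/2, focused on a subject 2 m away,
# with the background 10 m away.
background_blur = circle_of_confusion(50, 2, 2000, 10000)
```

With these hypothetical values the background blurs to a disc of roughly 0.51 mm on the sensor, while anything at the focus distance stays sharp; stopping down to f/8 shrinks the blur to a quarter of that, which is why a wide aperture is the usual choice when the background should fall away softly.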

The composition is highly believable, with the deer placed naturally in its environment. The lighting and shadows match the real-world setting, and the depth of the scene gives the shot a lifelike feel. While it’s unclear if the Rule of Thirds was strictly applied, the shot feels balanced and draws attention to the deer in a natural way.

Key elements in the scene include:

- 3D Deer: Realistically modeled, textured and groomed.

- Grass and Terrain: Realistic natural environment.

- Background: A blurred, realistic backdrop matching the lighting.

- Lighting and Shadows: Consistent with the environment, adding realism.

- Realistic Textures: The deer’s fur and skin are carefully textured to feel lifelike.

The composite of the deer shot combines live-action plates and CGI elements. The live-action footage provides the background and natural environment, while the CGI elements include the 3D deer model, realistic textures, and added effects like depth of field. The compositing process integrates the digital deer with the live-action scene by matching lighting, shadows, and camera movement. The purpose of this composite is to create a seamless “impression of reality,” making the deer appear as though it truly exists within the natural environment. It achieves this by ensuring the lighting, perspective, and textures are consistent across both elements.

Framestore’s attention to detail in modeling, lighting, and compositing creates a convincing shot where the digital deer feels entirely integrated into the real-world setting, making it hard to distinguish whether it’s fully live action or CGI.

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 4 – Photorealism in VFX

Key Characteristics of Photorealism:

- Detail and Texture: Photorealistic works feature intricate details, including textures that mimic real-life surfaces—like skin, fabric, and natural elements—captured with precision.

- Lighting and Shadows: Realistic lighting, including highlights and shadows, plays a crucial role. It often involves complex interactions of light sources, reflections, and ambient lighting.

- Colour Accuracy: The use of colour is closely aligned with reality, employing a full range of tones and subtle gradations to replicate how colours appear in different lighting conditions.

- Perspective and Depth: Accurate perspective creates a three-dimensional feel. Depth of field techniques can enhance realism by mimicking how cameras focus on different planes.

- Complexity in Composition: Photorealistic images often include complex compositions with multiple elements that are meticulously arranged to reflect reality.

- Attention to Realism in Representation: Unlike stylized or abstract art, photorealism focuses on depicting subjects as they appear in life, without exaggeration or alteration.

Distinguishing Photorealism from other styles of VFX

- Intent: While many styles may interpret or stylize subjects (like Impressionism or Surrealism), photorealism aims for accuracy and fidelity to reality.

- Technique: Photorealism often involves meticulous planning, layering, and methods like airbrushing or digital rendering to achieve a high level of detail, contrasting with the looser or more expressive techniques of other styles.

- Viewer Experience: Photorealistic works can evoke different emotional responses, often aiming to impress the viewer with the sheer technical skill and detail, while other styles might prioritize emotional expression or conceptual ideas over realism.

- Medium Variety: Photorealism can be executed in various media, including painting, drawing, and digital art, whereas other styles may be more tied to specific mediums.

In your own words, define photorealism:

Photorealism is the artistic or technical goal of creating an image or scene that looks as realistic as possible, often to the point where it’s indistinguishable from a photograph. In visual effects and digital art, photorealism involves replicating the fine details of real-world materials, lighting, and textures to mimic how things appear in the physical world. This includes accurate reflections, shadows, surface imperfections, and the way light interacts with different objects and environments.

Achieving photorealism requires a deep understanding of how the real world works—how light behaves, how surfaces react to it, and how objects move or interact with their surroundings. In VFX, this means using advanced techniques like high-quality 3D modeling, realistic texturing, complex lighting setups, and precise rendering. The goal is to create digital scenes, characters, or objects that not only look lifelike but also behave naturally when integrated into a scene, making them feel part of the physical world.

In short, photorealism strives to create an illusion so convincing that the digital elements seem just as real as anything captured on camera, blurring the line between the digital and the real.

Trend of photorealism in VFX

The trend of photorealism in VFX really took off in the early 1990s with groundbreaking films like Jurassic Park (1993) and Terminator 2: Judgment Day (1991). These films proved that CGI could create lifelike effects that traditional methods struggled to achieve, like photorealistic dinosaurs and morphing metal, which could seamlessly interact with live-action elements. Jurassic Park especially showcased how CGI could blend with practical effects to create a fully believable world, which wowed audiences and set a new bar for realism in visual effects.

The late 1990s and early 2000s saw further innovation, with The Matrix (1999) and The Lord of the Rings trilogy (2001–2003) building on the foundation established by earlier films. The Matrix popularized the use of “bullet time” effects and brought hyperrealistic action sequences that felt groundbreaking. Meanwhile, The Lord of the Rings trilogy used motion capture to bring Gollum to life, marking one of the first times that a fully digital, photorealistic character had such an emotional and central role in a live-action film. This demonstrated how CGI could enhance character-driven storytelling, beyond just spectacular visuals.

These successes spurred demand for ever-more realistic effects, as audiences began expecting flawless, photorealistic integration of digital and live-action elements. Studios invested heavily in improving CGI techniques like ray tracing for more realistic lighting and reflections, as well as refining compositing techniques to ensure CGI blended perfectly with filmed environments. This era solidified photorealism in VFX as both a creative and technical goal, driving VFX studios to continuously push the boundaries of realism in film.

Today, photorealism in VFX has become essential, with studios using AI-driven tools, advanced simulations, and high-resolution texturing to create digital worlds that audiences believe are real.

How is photorealism in VFX achieved

Photorealism in VFX is achieved by using a blend of advanced techniques to make computer-generated elements look indistinguishable from real-world footage. It begins with creating highly detailed 3D models of characters, objects, and environments, often based on real-world data like scans or reference imagery. These models are then textured with intricate details, such as skin pores, fabric fibers, and subtle surface imperfections, and shaded to mimic how light interacts with various materials. Lighting plays a crucial role in photorealism, with digital lighting setups using global illumination to simulate realistic light behavior, such as how it bounces off surfaces and diffuses through materials.
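Global illumination builds on a simple direct-lighting model. As a minimal sketch, assuming a single point light and a perfectly matte (Lambertian) surface, the Python below computes direct diffuse lighting from Lambert’s cosine law and inverse-square falloff. The function names are hypothetical, and a production renderer would add the bounced (indirect) light that global illumination simulates on top of this.

```python
import math

def lambert_irradiance(light_intensity, light_pos, point, normal):
    """Direct diffuse irradiance at `point` from a point light.
    Lambert's cosine law plus inverse-square falloff; `normal` must be unit length.
    Indirect (bounced) light is NOT included in this sketch."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist_sq = sum(c * c for c in to_light)
    dist = math.sqrt(dist_sq)
    direction = [c / dist for c in to_light]
    # Surfaces facing away from the light receive nothing.
    cos_theta = max(0.0, sum(n * d for n, d in zip(normal, direction)))
    return light_intensity * cos_theta / dist_sq

def shade(albedo, irradiance):
    """Outgoing diffuse radiance of a Lambertian surface: albedo / pi * E."""
    return albedo / math.pi * irradiance
```

Tilting the surface away from the light or moving the light further away both darken the result, which is why matching the direction and distance of the real key light matters when lighting CG elements.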

Motion capture is used to record the natural movements of actors or animals, which are mapped onto CG models for realistic animation. Additionally, VFX artists simulate real-world physics, like gravity, fluid dynamics, and cloth behavior, to ensure digital elements interact believably with the environment. Once everything is created, compositing software is used to integrate the digital elements with live-action footage, making adjustments to lighting, shadows, and color to ensure everything blends seamlessly. The attention to small details, like reflections, atmospheric effects, and imperfections, finalizes the illusion of realism, creating a world where the digital and physical feel indistinguishable.
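Physics simulation ultimately comes down to stepping equations of motion forward in time. The sketch below is a deliberately tiny example, assuming one object, gravity only, and flat ground at height zero. It uses semi-implicit Euler integration (a common, simple integrator, not necessarily what any given VFX package uses), one step per frame at 24 fps; the function name and restitution value are hypothetical choices.

```python
def simulate_drop(height_m, steps, dt=1.0 / 24.0, gravity=-9.81, restitution=0.5):
    """Semi-implicit Euler integration of an object dropped from height_m.
    One step per film frame at 24 fps by default; the object bounces off
    y = 0 and keeps `restitution` of its speed (an assumed value)."""
    y, vy = height_m, 0.0
    positions = [y]
    for _ in range(steps):
        vy += gravity * dt       # update velocity first (semi-implicit Euler)
        y += vy * dt             # then position, using the new velocity
        if y < 0.0:              # crude ground collision
            y = 0.0
            vy = -vy * restitution
        positions.append(y)
    return positions

heights = simulate_drop(2.0, 48)  # two seconds of animation at 24 fps
```

Each bounce peaks lower than the last because energy is lost at the ground, the kind of physically plausible behaviour that is tedious to keyframe by hand.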

Compositing live footage with CGI

Compositing live-action footage with CGI for photorealism involves blending digital elements seamlessly into real-world scenes, making them look as if they were captured together. The process starts by matching the lighting and shadows of the CGI elements to the live-action footage. Artists replicate the direction, color, and intensity of the scene’s light sources so the CGI objects cast realistic shadows and interact with the environment. Camera matching is also crucial—using techniques like camera tracking, the 3D camera in the CGI scene is aligned with the live-action camera to ensure correct perspective and motion.
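Camera tracking is usually judged by reprojection error: once a solver has estimated the camera, the 3D positions of the tracked features are projected back into the image and compared with where those features actually appear in the plate. The Python sketch below shows that measurement under simplifying assumptions (an ideal pinhole camera with no lens distortion, points already expressed in camera space); the function names and numbers are illustrative, not any tracking package’s API.

```python
import math

def project(point_cam, focal_px, cx, cy):
    """Ideal pinhole projection of a camera-space point
    (x right, y down, z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)

def reprojection_error(points_cam, tracked_px, focal_px, cx, cy):
    """Root-mean-square pixel distance between projected 3D points
    and the 2D features tracked in the live-action plate."""
    total = 0.0
    for p, (u, v) in zip(points_cam, tracked_px):
        pu, pv = project(p, focal_px, cx, cy)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(total / len(points_cam))
```

A low error means the virtual camera’s view lines up with the real one, so CG elements stay locked to the plate; a high error typically shows up as CG objects sliding against the footage.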

Color grading helps integrate CGI by adjusting the digital elements to match the color palette of the live footage, while depth of field and lens effects (like motion blur or distortions) are added to ensure the CGI blends naturally with the practical elements. Compositors also merge digital effects with practical ones, like explosions or smoke, so they interact convincingly with the environment.

Finally, subtle details like film grain, motion blur, and small imperfections are added to make the CGI feel as though it was shot with the same camera. When done correctly, these techniques create a seamless fusion of CGI and live-action that feels completely real.
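The final integration step usually rests on the standard “over” operation, which layers a CG element (with its alpha channel) on top of the plate, after which grain can be added so the CG matches the texture of the footage. This Python sketch works on a single pixel and assumes a premultiplied foreground. The Gaussian noise is only a crude stand-in for real film grain, which varies with brightness and grain size, and the function names and strength value are hypothetical.

```python
import random

def over(fg_rgb, fg_alpha, bg_rgb):
    """'Over' operation for one pixel with a premultiplied foreground:
    result = fg + (1 - alpha) * bg."""
    return tuple(f + (1.0 - fg_alpha) * b for f, b in zip(fg_rgb, bg_rgb))

def add_grain(rgb, strength=0.02, rng=None):
    """Add zero-mean Gaussian noise per channel as a rough stand-in for grain."""
    rng = rng if rng is not None else random.Random()
    return tuple(c + rng.gauss(0.0, strength) for c in rgb)

# A half-transparent CG pixel over a grey plate pixel, then grain.
pixel = add_grain(over((0.4, 0.2, 0.1), 0.5, (0.2, 0.2, 0.2)), rng=random.Random(7))
```

Where alpha is 1 the CG fully covers the plate, and where it is 0 the plate shows through untouched; soft edges such as fur sit in between.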

Photorealistic CG renders

Creating photorealistic fully CGI scenes involves crafting every element—from environments to characters—entirely in the digital realm, without using live-action footage. It begins with highly detailed 3D modeling, where artists create lifelike digital representations of objects and environments, often based on real-world scans or reference materials. Textures are then applied to simulate realistic surfaces, including subtle details like dirt, scratches, and wear, which make the scene feel authentic.

Techniques to make fictional creatures look photorealistic: for example, basing a dragon on real lizard and bird anatomy so that it reads as a believable animal.

The transition from the 2D animation of the original Lion King (1994) to the 3D CGI approach in the 2019 version represents a significant evolution in animation technology and storytelling.

2D Animation (1994)

- The original Lion King utilized traditional hand-drawn animation, characterized by vibrant colors and expressive character designs.

- This style allowed for exaggerated expressions and fluid movement, creating an emotional connection with the audience.

- The animation was complemented by a memorable soundtrack and a more stylized representation of the African savanna.

3D CGI Animation (2019)

- The 2019 version, while often referred to as “live-action,” is entirely created using CGI. It was developed with the help of visual effects studio MPC, pushing the boundaries of realism in animation.

- The photorealistic approach aimed to create a more immersive experience, showcasing lifelike animals and environments. This included meticulous detail in fur, skin textures, and natural lighting effects.

“Live Action” Misconception

- Disney’s marketing described the 2019 film as “live-action,” which can be misleading. While it features realistic visuals, every element is digitally rendered. The term evokes the idea of real actors and physical sets, which is not the case here.

- The film opens with a shot that mimics a live-action feel, showcasing the landscape in stunning detail. However, from that point on, the entire film relies on CGI, challenging the audience’s perception of what constitutes “live-action.”

Non-photorealistic Rendering – NPR

Non-photorealistic rendering (NPR) is a technique used in digital art and animation that prioritizes a stylized or artistic representation over lifelike realism. Instead of trying to replicate the exact details and textures of the real world, NPR embraces bold colors, simplified shapes, and exaggerated features to create a distinctive look.

This approach often draws inspiration from various art styles, such as illustration, painting, or comic books, allowing for creative expression and a unique visual identity. NPR can evoke emotions and convey character traits more effectively by emphasizing certain elements, such as facial expressions or dynamic movements, rather than focusing on realistic details.
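One common way to get this flat, bold look is cel (toon) shading: instead of letting lighting vary smoothly, the usual diffuse term is snapped into a few flat bands, and edges where the surface turns away from the camera are drawn as outlines. The Python below is a minimal per-pixel sketch of both ideas, assuming the dot products have already been computed by a renderer. The band count and outline threshold are hypothetical defaults, and this is not a description of how any particular film or game was actually rendered.

```python
def toon_shade(n_dot_l, bands=3):
    """Quantize a Lambert term (clamped to 0..1) into flat tonal bands.
    `bands` must be at least 2."""
    x = min(max(n_dot_l, 0.0), 1.0)
    band = min(int(x * bands), bands - 1)  # which band this value falls in
    return band / (bands - 1)

def is_outline(n_dot_v, threshold=0.2):
    """Flag silhouette pixels, where the surface is nearly edge-on to the viewer."""
    return abs(n_dot_v) < threshold
```

Because nearby lighting values collapse to the same tone, the shading reads as painted shapes rather than smooth gradients, and the threshold controls how thick the outlines appear.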

Spider-Man: Into the Spider-Verse – NPR in film

Spider-Man: Into The Spider-Verse


Marvel Rivals – NPR in games

In the case of Marvel Rivals, the game utilizes vibrant colors, bold outlines, and exaggerated features that evoke a comic book aesthetic, which is characteristic of many Marvel properties. This approach allows for expressive character designs and dynamic visuals that enhance the overall gaming experience, making it feel more like stepping into a comic book rather than a realistic environment.

By opting for NPR, the game can emphasize character personalities and actions, creating a fun and engaging atmosphere that resonates with the comic book and superhero themes. This style contrasts sharply with photorealistic rendering, which aims for lifelike details and realism, highlighting the versatility and creativity of different artistic approaches in visual media.

Week 4 Weekly Post – Discuss types of photorealism

Photorealism in VFX varies in complexity between fully CG-created scenes and CG composites with live footage. CG composites, where digital elements are layered into live-action scenes, often achieve realism more easily because they draw on actual footage for reference. By matching lighting, shadows, and texture to the filmed environment, VFX artists can seamlessly blend digital elements, helping viewers accept the added effects as part of the real scene. This approach reinforces the narrative’s authenticity, as Martin Lister discusses in New Media: A Critical Introduction, by aligning digital effects with “narrative truth,” giving them a natural feel (Lister et al., 2018).

On the other hand, fully CG movies can sometimes appear artificial, as every aspect is digitally generated without real-world anchors. Without the natural irregularities and subtle cues found in live footage, fully CG scenes can occasionally seem “too perfect” or stylized, which may pull viewers out of the experience. However, this all-digital environment also provides unmatched creative flexibility. In films like The Lion King (2019), artists could craft every detail, from atmospheric lighting to highly controlled character expressions, allowing for creative freedom and intricate world-building. Barbara Flueckiger notes in Photorealism, Nostalgia, and Style that fully CG photorealism can evoke nostalgia and emotional depth by recreating classic cinematic textures, enriching the digital storytelling experience (North et al., 2015).

Ultimately, while CG composites may achieve realism more easily, fully CG scenes allow VFX artists to explore new, stylized, or imaginative worlds with a level of control and expression impossible in live-action composites.

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 5 – Bringing indexicality to the capture of movement

Evolution of motion capture

The technique of using real-life movement for animation can be traced back to the mid-1910s and Max Fleischer’s invention of rotoscoping, in which an actor is filmed and then drawn over by animators frame by frame to replicate the motion. Films such as Disney’s Snow White and the Seven Dwarfs (1937) used rotoscoping to create realistic movements for their characters. Moreover, this early motion-capture process helped to streamline production, and Snow White became one of the first feature-length animated films to be released in American theaters.

Disney also participated in the next breakthrough in motion-capture technology. Thanks to the invention of a rudimentary motion-capture suit by engineer and animator Lee Harrison III, Disney patented a system in the mid-1960s to record actors’ movements using potentiometers attached to the performers’ suits. These gauges gathered movement data, which could then be used on animatronics in Disney theme park attractions. However, the technology was overly cumbersome, making it impractical for most productions.

Nevertheless, with the continued development of smaller processing units, the evolution of proprietary software, and the decreasing cost of production elements, by the late 1980s and early ’90s motion capture had come to be seen as a new frontier with real creative potential. Today the technology is used in a wide variety of industries and entertainment outlets. Motion capture’s increased popularity has made the process less time-consuming for creators, and the accuracy of the captured data has given performers a powerful new way of communicating with their audience.

Capture Trends

- Motion Capture

- Facial Capture

- Motion tracking, match moving

- Scans (Lidar, Megascans)

- Photogrammetry

- HDRI

Unique examples of ways in which motion capture is being used:

Stray Game – Cat Motion Capture

Stray – Game

The Call of the Wild Exclusive Behind the Scenes – Dogs in MoCap (2020) | FandangoNOW Extras

Written Post 5 – Compare keyframe animation to motion capture

Preparatory Reading: More than a Man in a Monkey Suit: Andy Serkis, Motion Capture, and Digital Realism by Tanine Allison (2011)

Preparatory watching: Andy Serkis Interview for WAR FOR THE PLANET OF THE APES – JoBlo Movie Trailers

Animation from motion capture is data-driven; keyframe animation is created by hand.

Keyframe animation is iconic: it is animation that represents something (think of a walk cycle as a representation of walking).

Motion capture animation is indexical: it points towards a walk that actually happened (the motion capture data would not exist without it).
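The iconic/indexical distinction can be seen in how the two kinds of animation data are stored and played back. In this minimal Python sketch (hypothetical function names; smoothstep easing is just one of many curves an animator might choose), keyframed motion is a handful of authored keys with the in-between frames invented by interpolation, while motion-capture data is a value recorded for every frame, each one a trace of the actual performance.

```python
def ease_in_out(t):
    """Smoothstep easing: slow start, slow finish."""
    return t * t * (3.0 - 2.0 * t)

def keyframe_value(keys, frame):
    """Evaluate hand-set keys [(frame, value), ...] sorted by frame.
    Every value between keys is invented by interpolation."""
    if frame <= keys[0][0]:
        return keys[0][1]
    if frame >= keys[-1][0]:
        return keys[-1][1]
    for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + (v1 - v0) * ease_in_out(t)

def mocap_value(samples, frame):
    """Motion-capture data: one recorded value per frame, nothing invented."""
    return samples[frame]
```

Editing a single key reshapes all the frames around it, whereas in capture data each frame stands on its own as a recorded sample.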


- How do you think the two approaches / technologies are the same or different, where do they align and where do they not?

- Think about the motion data stored and used in motion capture files, do you think it is indexical? How does it bring the real

- Feel free to illustrate your post with example images connected to the subject

Motion Capture and Key Frame Animation are essential techniques in animation, each suited to different creative needs. MoCap, which records live actors’ movements and expressions, is ideal for realistic human motions and authentic emotions, while Key Frame Animation excels in animating stylized or non-human characters with imaginative, exaggerated movements.

A fantastic example of MoCap’s effectiveness can be seen in the Planet of the Apes films, where characters like Caesar convey complex emotions, such as anger and empathy. By capturing subtle details in facial expressions and body movements, MoCap allows audiences to form a genuine connection with non-human characters, adding significant emotional depth and relatability.

However, for non-human creatures, MoCap may fall short, as shown in Mowgli, where using MoCap for animal characters resulted in unnatural expressions—a phenomenon known as the “uncanny valley.” In these cases, Key Frame Animation is often more effective, allowing animators to exaggerate movements and create more expressive, natural behaviors, avoiding the awkwardness of imposing human motion onto animals.

A unique example of MoCap used effectively on a completely non-human character can be seen in The Hobbit, where the dragon Smaug was brought to life through combining both techniques. MoCap captured Smaug’s facial expressions, infusing him with personality, while Key Frame Animation controlled his dynamic flights and gestures. This blend—using MoCap for facial details and Key Frame Animation for body movements—struck a balance between realism and fantasy, making Smaug both relatable and vividly fantastical.

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 6 – Reality Capture (LIDAR) & VFX

Reality Capture

Reality capture is an umbrella term that encompasses a range of technologies and methods used to digitally document and recreate the physical world in three dimensions. This field has gained significant traction across various industries, including architecture, engineering, construction (AEC), film, gaming, and cultural heritage preservation, thanks to advancements in both hardware and software. The main techniques involved in reality capture include laser scanning, photogrammetry, depth sensing, 360-degree imaging, and motion capture, all aimed at creating accurate digital representations of real objects and environments.

What types of reality capture are there?

Lidar

LiDAR, which stands for Light Detection and Ranging, is changing the landscape of visual effects (VFX) by allowing filmmakers to capture and recreate environments with remarkable precision.

The technology operates by sending out laser pulses that measure distances based on how long it takes for the light to bounce back after hitting surfaces. This capability enables the generation of highly detailed 3D models, which are crucial for achieving realism in visual effects.
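The distance calculation described above is simple enough to write out. Because each pulse travels to the surface and back, the measured round-trip time is halved. This Python sketch assumes an ideal single return and ignores the slight slowing of light in air; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre

def tof_distance_m(round_trip_s):
    """Distance to a surface from a laser pulse's round-trip time.
    The pulse travels out and back, so the total path is halved."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

distance = tof_distance_m(100e-9)  # a 100-nanosecond round trip
```

A 100 ns round trip corresponds to a surface about 15 m away, and each nanosecond of timing error shifts the measurement by roughly 15 cm, which is why LiDAR units need extremely precise timing.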

The process starts with data capture, where LiDAR systems can be used in various ways, such as being mounted on drones or set up on the ground. As these laser pulses scan the surroundings, they create a point cloud—a dense collection of points in three-dimensional space. Each point corresponds to a specific location and includes information about its position and sometimes its color. This point cloud forms the basis for developing digital models, allowing artists to accurately represent everything from landscapes to intricate architectural details.
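Each laser return gives a distance along a known beam direction, so building the point cloud is a change from spherical to Cartesian coordinates. The Python sketch below assumes a static scanner reporting azimuth and elevation in degrees, with z pointing up in the scanner’s own frame. The names and the optional color field are illustrative, and real pipelines also register scans taken from several positions into one shared coordinate system.

```python
import math

def lidar_point(range_m, azimuth_deg, elevation_deg, color=None):
    """Convert one return (range plus beam angles) into an (x, y, z, color)
    point in the scanner's own frame, with z up. `color` is optional."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)   # distance projected onto the ground plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z, color)

def to_point_cloud(returns):
    """Turn a list of (range, azimuth, elevation[, color]) returns into points."""
    return [lidar_point(*r) for r in returns]
```

Sweeping the beam through many angles and converting every return this way is what produces the dense cloud of points described above.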

After the point cloud is created, artists convert it into a polygonal mesh, turning the raw data into a usable format for VFX production. This step is critical, as it transforms the abstract points into a tangible 3D model. Artists then apply textures, often using color data from the LiDAR readings or additional photographs. This meticulous process ensures that the digital models seamlessly integrate with live-action footage, creating a cohesive visual experience.

LiDAR is especially beneficial for crafting complex environments, like bustling cityscapes or lush forests. Its high level of accuracy allows for realistic representations of real-world elements, enhancing the overall quality of the visual effects. Additionally, because LiDAR can capture large areas quickly, it streamlines the modeling process, freeing artists to focus more on creative aspects rather than getting bogged down in technical details.

Beyond modeling, LiDAR data is also invaluable during pre-visualization (previs). Filmmakers can leverage this information to plan shots and understand spatial dynamics within a scene before actual filming takes place. This advance planning not only saves time during production but also helps ensure that the final product aligns with the director’s vision.

LiDAR is a powerful tool in contemporary visual effects, blending accuracy with artistic creativity. By equipping artists with detailed and reliable data, it enhances the quality of digital content and contributes to a more immersive cinematic experience. As technology progresses, the role of LiDAR in VFX will likely continue to grow, opening up new avenues for filmmakers and visual creators.

Example of usage – Jurassic World: Fallen Kingdom

Photogrammetry

Photogrammetry works by capturing a series of overlapping photographs of a real-world object or environment from multiple angles, which are then processed using specialized software to create a 3D point cloud that represents the structure of the subject. This point cloud is transformed into a mesh, defining the object’s shape, and the original images are used to generate realistic textures that enhance the model’s appearance. Once the detailed 3D model is created, it can be imported into VFX software for animation, lighting, and integration into live-action footage, allowing for seamless blending of digital and practical elements. Photogrammetry is particularly valuable for creating accurate environments, props, and character assets, enabling VFX artists to produce highly detailed and immersive visuals that elevate the storytelling experience in films and games.
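At the heart of photogrammetry is triangulation: the same feature seen from two camera positions shifts between the images, and the size of that shift reveals its depth. This Python sketch covers only the simplest case, assuming two rectified photos (same orientation, cameras offset sideways by a known baseline) and a known focal length in pixels. Real photogrammetry software instead solves for many unaligned cameras at once, and the function names here are hypothetical.

```python
def stereo_depth(focal_px, baseline_m, x_left_px, x_right_px):
    """Depth of a feature matched in two rectified photos baseline_m apart.
    Disparity is the horizontal shift of the feature between the images."""
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("feature must shift left in the right-hand image")
    return focal_px * baseline_m / disparity

def triangulate(focal_px, baseline_m, cx, cy, xl, yl, xr):
    """Recover the 3D point, in the left camera's frame, from one match."""
    z = stereo_depth(focal_px, baseline_m, xl, xr)
    return ((xl - cx) * z / focal_px, (yl - cy) * z / focal_px, z)
```

Distant features shift very little between photos, so small matching errors produce large depth errors for far-away geometry; overlapping photos from many angles help counter this.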

Example of usage: Avatar

Depth Based Scanning

Depth-based scanning is a method used in visual effects to capture three-dimensional information about objects and environments, providing a crucial layer of detail that enhances the realism of digital assets. This technique typically involves using depth sensors or cameras, such as LiDAR (Light Detection and Ranging) or structured light systems, to measure distances between the sensor and various points in the scene.

The process begins with the depth sensor emitting signals, either laser pulses or infrared light, which bounce back after hitting surfaces. Time-of-flight systems calculate the distance to each point from how long these signals take to return, while structured-light systems project a known pattern and work out depth by triangulating how that pattern deforms across surfaces. Either way, the result is a dense point cloud that represents the shape and structure of the environment or object.
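Many depth sensors deliver their measurements as a depth image, one distance per pixel, which is turned into a point cloud by running the camera projection in reverse. The Python sketch below assumes a pinhole sensor with known focal length and image centre, depth in metres measured along the viewing axis, and 0 meaning no reading; the function name is hypothetical.

```python
def depth_map_to_points(depth, focal_px, cx, cy):
    """Back-project a depth image (rows of metres, 0 = no reading) into
    (x, y, z) points in the sensor's camera frame, using a pinhole model."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:          # skip pixels with no measurement
                continue
            points.append(((u - cx) * z / focal_px, (v - cy) * z / focal_px, z))
    return points
```

Running this on every frame from a live sensor is what allows depth-based capture to feed real-time uses such as virtual production and augmented reality.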

Once this data is captured, it can be processed into a 3D model, similar to photogrammetry, but with a greater focus on capturing depth information. The resulting models are often highly detailed, with accurate representations of geometry that can be used in VFX productions.

Depth-based scanning is particularly beneficial for capturing complex geometries and intricate details in real-time, making it ideal for virtual production and augmented reality applications. By integrating these detailed 3D scans with CGI elements, VFX artists can create more immersive and convincing scenes that seamlessly blend digital and practical effects.

Overall, depth-based scanning enhances the VFX workflow by providing precise spatial information, allowing for better integration of assets and a more realistic portrayal of environments and characters in film, television, and gaming.

Example of usage: Gravity (2013)

In “Gravity” (2013), depth-based scanning was crucial for creating the film’s stunning visual effects and realistic portrayal of space environments. The filmmakers utilized a virtual production process that seamlessly combined live-action footage with CGI. By employing depth-based scanning, they were able to create precise 3D models of the spacecraft and space debris, allowing for realistic interactions between the live actors and the digital environment.

Advanced camera tracking techniques captured the movement of the actors in a controlled studio setting, with depth sensors ensuring that the CGI elements aligned perfectly with the live-action footage. This depth information also enabled more sophisticated lighting effects, mimicking how light behaves in the vacuum of space and contributing to the film’s dramatic visuals.

Additionally, the accurate mapping of depth and movement helped simulate weightlessness, making the scenes of floating in space appear more believable. Overall, depth-based scanning was integral to blending live-action with CGI, resulting in an immersive and visually striking cinematic experience.

Find an example of a 3D scanning project of each type. Put an image of each kind on your sketchbook, caption the image with a title of the project and a line of description.

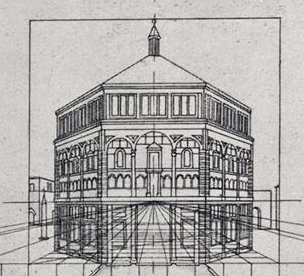

Analogue Perspective

Filippo Brunelleschi, a key figure of the Italian Renaissance, is credited with developing the system of linear perspective, which revolutionized the way space and depth were represented in art. His innovations laid the groundwork for realistic representation in painting and architecture, profoundly influencing the course of Western art.

The Principles of Linear Perspective

Brunelleschi’s system of perspective is based on a few fundamental principles:

- Vanishing Points: Central to Brunelleschi’s perspective is the concept of a vanishing point, where parallel lines appear to converge in the distance. This point helps to create the illusion of depth on a flat surface.

- Horizon Line: The horizon line represents the viewer’s eye level. As objects recede into the distance, those above this line appear to descend towards it, while those below appear to rise towards it, establishing a sense of spatial orientation.

- Orthogonal Lines: These lines lead to the vanishing point and guide the viewer’s eye into the depth of the composition. By aligning objects along these lines, artists could create a coherent spatial relationship among various elements in the scene.
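The convergence of orthogonals can be checked numerically. In this Python sketch (hypothetical names, assuming an eye at the origin looking along z with a flat picture plane one unit in front of it), two parallel rails run straight away from the viewer below eye level. Projected onto the picture plane, they close in on the point (0, 0), the vanishing point on the horizon, rising towards it as they recede.

```python
def project_to_picture_plane(point, eye_dist=1.0):
    """Project a 3D point (x right, y up, z away from the viewer) onto a
    picture plane eye_dist in front of the eye (one-point perspective)."""
    x, y, z = point
    return (eye_dist * x / z, eye_dist * y / z)

def point_along(start, direction, t):
    """Point at parameter t along a straight line."""
    return tuple(s + t * d for s, d in zip(start, direction))

rails = [(-1.0, -1.0, 1.0), (1.0, -1.0, 1.0)]  # two parallel rails, below eye level
direction = (0.0, 0.0, 1.0)                     # both run straight away from the viewer
for t in (0, 9, 99, 999):
    print([project_to_picture_plane(point_along(r, direction, t)) for r in rails])
```

Nothing in the code “aims” the rails at a vanishing point; the convergence falls out of dividing by distance, which is the same relationship a virtual camera in a VFX package relies on.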

Brunelleschi’s Experiment

Brunelleschi demonstrated his perspective techniques through a famous experiment involving a painting of the Baptistery in Florence. He painted the Baptistery on a small panel and drilled a hole through it; a viewer looked through the hole from the back of the panel at a mirror reflecting the painting, and by lowering the mirror could compare the painted image with the actual scene. This ingenious method illustrated how his perspective principles created a convincing illusion of three-dimensionality.

Impact on VFX

De Pictura (1435) Principles

“De Pictura,” written by Leon Battista Alberti in 1435, is a seminal text that explores the principles of painting and the representation of space. In this work, Alberti emphasizes the importance of perspective, particularly linear perspective, which allows artists to create the illusion of depth on a flat surface. He introduces the idea of a vanishing point, where parallel lines seem to converge, helping to make paintings look more realistic.

Alberti also discusses how to compose a painting, suggesting that artists use geometric shapes to organize their scenes in a harmonious way. He believed that a well-structured composition could enhance the beauty and emotional impact of a work.

Another key aspect of “De Pictura” is Alberti’s focus on the viewer’s experience. He encourages artists to consider how the audience will engage with the artwork, likening a painting to a window that opens up to another world. This perspective invites viewers to immerse themselves in the scene.

Overall, “De Pictura” provides foundational ideas about perspective, composition, and viewer engagement that shaped the practice of painting during the Renaissance and influenced many artists in the years that followed.

The principles outlined in “De Pictura” by Leon Battista Alberti are foundational to the understanding of perspective and composition in painting.

- Linear Perspective: Alberti gave the first written account of linear perspective, in which parallel lines appear to converge at a single vanishing point on the horizon. This technique creates the illusion of depth and three-dimensionality on a flat surface.

- The Vanishing Point: The vanishing point is the point in the composition where lines converge, guiding the viewer’s eye into the depth of the scene. It is crucial for establishing spatial relationships in a painting.

- Geometric Composition: Alberti emphasized the use of geometric shapes to structure a painting. He encouraged artists to arrange figures and objects in a way that creates harmony and balance, using triangles, squares, and circles to organize the composition.

- Viewer’s Perspective: Alberti highlighted the importance of considering the viewer’s position when creating a painting. He believed that the artwork should invite the viewer to engage with the scene, making the viewer feel like they are peering through a “window” into another world.

- Proportion and Scale: Maintaining proportion and scale is essential for achieving realism. Alberti advised artists to carefully consider the size of objects in relation to one another and their placement in the overall composition.

- Light and Shadow: The treatment of light and shadow (chiaroscuro) is important for creating volume and depth. Alberti encouraged artists to observe how light interacts with forms and to replicate that in their work to enhance realism.

- Emotional Impact: Alberti believed that a well-composed painting should evoke emotion. The arrangement of figures, the use of perspective, and the play of light should all work together to create a compelling narrative or feeling within the artwork.

![Bringing Pictorial Space to Life: computer techniques for the analysis of paintings](https://figures.semanticscholar.org/552d5b92027184900e52107a988b46cc3939c529/11-Figure2-1.png)

Pictorial Space

Pictorial space refers to the way artists create the illusion of depth and three-dimensionality on a flat surface, like a canvas. In “De Pictura,” Alberti explores several techniques that help achieve this illusion.

One key method is linear perspective, where lines converge at a vanishing point, making objects appear to recede into the distance. This creates a more realistic sense of space. Another important technique is foreshortening, which alters the size and angle of objects to suggest they are closer or further away, enhancing the three-dimensional effect.
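Linear perspective and foreshortening can be made concrete with a minimal Python sketch. It uses a simple pinhole model, which is an assumption of this example and not something taken from Alberti's text. With the eye at the origin looking along z, a point at (x, y, z) lands on the picture plane at (f·x/z, f·y/z). The sketch projects two parallel rails below eye level at increasing distances. As z grows, the gap between them shrinks and both rails head towards (0, 0), which is the vanishing point on the horizon line. The function name `project` and the rail coordinates are illustrative only.

```python
def project(point, focal_length=1.0):
    """Project a 3D point (x, y, z) onto a picture plane at distance focal_length.
    Assumes a simple pinhole model: the eye sits at the origin looking down +z."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the eye (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


# Two parallel rails running away from the viewer along +z (illustrative values):
# one at x = -1 and one at x = +1, both 1 unit below eye level (y = -1).
distances = (1, 2, 4, 8, 1000)
left_rail = [(-1.0, -1.0, z) for z in distances]
right_rail = [(1.0, -1.0, z) for z in distances]

for left, right in zip(left_rail, right_rail):
    pl, pr = project(left), project(right)
    gap = pr[0] - pl[0]  # on-screen width between the rails (foreshortening)
    print(f"z={left[2]:>5}: left={pl}, right={pr}, gap={gap:.4f}")
```

Each time the distance doubles, the on-screen gap halves. Meanwhile y/z climbs towards 0, so the rails rise towards the horizon line and meet at the vanishing point. A CG camera applies a perspective projection of the same kind to every vertex it renders.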

Overlapping elements is another strategy; when one object overlaps another, it indicates which is in front, helping to establish spatial relationships. Atmospheric perspective also plays a role, where distant objects appear lighter and less detailed due to the effects of the atmosphere, while closer objects are richer in color and detail.

Scale and proportion are crucial as well—by adjusting the size of objects relative to each other, artists can create a convincing sense of depth. Finally, Alberti emphasizes that pictorial space should engage the viewer, inviting them to feel as if they are stepping into the scene.

Overall, pictorial space involves various techniques that work together to turn a flat image into a dynamic representation of a three-dimensional world, making the artwork more immersive and engaging.

Da Vinci Points

Perspective Machines

Digital Perspective

Analogue Perspective vs Digital Perspective

Why does scanned data need 3D computer space?

A bridge between physical and digital worlds

Environments – LiDAR scanning captures the detailed geometry of whole locations, such as buildings and landscapes.

Objects and props – Small- to mid-scale LiDAR and structured-light scanners allow artists to scan individual props, costumes or characters.

Human and creature capture – The increasing use of 3D scanning for digital doubles provides a foundation for realistic character animation.

Workshop Activity:

Analysis Activity – LiDAR Scan vs Photograph

Find a LiDAR scan image online. Look for scans of landscapes, buildings, famous landmarks such as the Eiffel Tower, or forests. Good sources include Sketchfab and scientific or architectural websites.

Describe key visual characteristics:

- Point Cloud: LiDAR scans often appear as a collection of dots or points, not continuous surfaces.

- Depth and Structure: They show shape and depth well but often lack the colour and texture detail found in photos.

- Wireframe Effect: Many scans have a skeletal, wireframe look, emphasising structure over surface details.

Compare to a photograph:

- Surface and Texture: Photos

Week 6 – Case Study on Reality Capture Technology in Preserving Ukrainian Cultural Heritage

In response to the ongoing conflict in Ukraine, advanced reality capture technologies, such as 3D laser scanning and LiDAR, have become crucial tools for preserving the country’s cultural heritage. These technologies enable the creation of precise digital replicas of historic buildings, monuments and landmarks. This allows them to be preserved in virtual form even if they are physically damaged or destroyed.

LiDAR works by emitting laser pulses that bounce back from surfaces. Timing each return lets the scanner measure distances and capture millions of data points, which together form an accurate 3D model of an object or site. This technology excels at documenting complex details, such as architectural features, that may be difficult to capture with traditional methods. LiDAR is often combined with photogrammetry, which reconstructs 3D form from overlapping photographs and is used here mainly to add colour and texture to the model. Together they can generate a complete, realistic digital representation of the scanned environment. These 3D models are then stored digitally, making them accessible for future restoration or historical research.
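The distance measurement described above can be sketched in a few lines of Python. This sketch assumes a simplified pulsed time-of-flight scanner (many real scanners also use phase-shift ranging) and uses made-up return times. Each pulse's round-trip time t gives a range d = c·t / 2, because the light travels out and back. The scanner's beam angles then turn that range into one (x, y, z) point of the point cloud. The function names and sample values are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_seconds):
    """One-way distance to a surface from a pulse's round-trip time.
    The pulse travels out and back, so the distance is half of c * t."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_point(distance, azimuth_deg, elevation_deg):
    """Convert one range measurement plus the beam's horizontal (azimuth)
    and vertical (elevation) angles into an (x, y, z) point,
    with the scanner at the origin."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), distance * math.sin(el))


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation in degrees).
returns = [(66.7e-9, 0.0, 0.0), (133.4e-9, 90.0, 10.0), (200.1e-9, 180.0, -5.0)]
point_cloud = [to_point(range_from_time_of_flight(t), az, el) for t, az, el in returns]

for p in point_cloud:
    print(tuple(round(v, 2) for v in p))
```

A real scan repeats this millions of times while the beam sweeps across the scene, which is why the raw result looks like a cloud of separate dots rather than a continuous surface.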

A real-world example of this technology in action is the preservation efforts for Ukrainian landmarks like Kyiv Pechersk Lavra and St. Sophia Cathedral. These sites, which face the threat of destruction due to the ongoing war, have been digitally documented by organizations like CyArk and the Ukrainian Cultural Heritage Preservation Fund. The digital models not only help safeguard the sites’ historical value but also provide a tool for reconstruction if needed in the future.

Despite challenges such as the high cost of LiDAR equipment, the impact of this technology is profound. It ensures that even if physical sites are lost, their cultural significance can still be preserved digitally for future generations.

Archangel Michael Church, Pidberiztsi

Sources:

3D Memory: Scanning Damaged Heritage Sites in Ukraine | Leica Geosystems

(305 words)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

Week 7 – Reality Capture (Photogrammetry) & VFX

Digital Facsimile

Quixel Megascans

Digitising the world for photorealism

Quixel boasts scanning teams around the globe

- Anything and everything in the world becomes a potential commercial asset to be captured and stored as a digital resource

- Catalogued and classified into ready-to-use sets and libraries

- Bergman states that photorealism is now incredibly simple to achieve, because it can be created quickly using 3D scans from Quixel’s library

A paradox?

Digital 3D Facsimile

Using techniques such as photogrammetry scanning, a digital 3D facsimile replicates the appearance, shape, texture and sometimes even the material properties of the original object as closely as possible.

Digital facsimiles are created from real-world objects or locations, and VFX artists can use them as photorealistic digital props.

Virtual sets and backgrounds can be created from detailed 3D scans of buildings, landscapes or iconic settings that can serve as …

Digital Doubles

Digital doubles, created through photogrammetry, are highly detailed 3D models of real-life people or objects used in films and video games. Photogrammetry involves capturing a series of photographs from different angles. Software then matches features across those photographs, triangulates their positions in 3D and builds a 3D mesh from the result. The outcome is a highly accurate digital replica with realistic textures, which can be animated or integrated into scenes as a stand-in for the actual person or object.
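Turning photographs into a 3D mesh relies on triangulation. The same feature is matched in photographs taken from different positions, and its 3D position is worked out from how far it shifts between them. The minimal Python sketch below shows that idea for the simplest possible case, which is an assumption made for clarity. It uses two identical, side-by-side cameras whose positions are already known (a rectified stereo pair). Depth follows from disparity as Z = f·B / d. Here f is the focal length in pixels, B is the distance between the cameras, and d is how far the feature shifts between the two images. The function and parameter names are hypothetical.

```python
def triangulate_rectified(x_left, x_right, y, focal_px, baseline_m):
    """Recover a 3D point seen by two side-by-side cameras.
    Assumes a rectified pair: identical cameras, parallel viewing directions,
    the right camera shifted baseline_m along x, and image coordinates in
    pixels measured from each image centre."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("the feature must appear further left in the right image")
    z = focal_px * baseline_m / disparity  # larger shift between images = closer point
    x = x_left * z / focal_px              # back-project through the left camera
    y3d = y * z / focal_px
    return (x, y3d, z)


# Hypothetical camera pair: 1000 px focal length, cameras 0.5 m apart.
print(triangulate_rectified(x_left=100.0, x_right=50.0, y=20.0, focal_px=1000.0, baseline_m=0.5))
# → (1.0, 0.2, 10.0)
```

Real photogrammetry tools work with dozens or hundreds of photos. They also solve for the unknown camera positions (bundle adjustment) and build a dense mesh from the triangulated points. This sketch covers only the core triangulation idea.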

In filmmaking, digital doubles are often used for stunts, action scenes, or situations that are too dangerous or impractical for an actor to perform. For example, if an actor is meant to jump from a great height or be in a hazardous environment, a digital double can take over those physical risks. The process captures intricate details, like skin texture and facial features, making these digital doubles incredibly lifelike.

As photogrammetry technology advances, digital doubles are becoming almost indistinguishable from real people, allowing filmmakers to push the boundaries of what’s possible in storytelling.

Batman Forever (1995) appears to be one of the first films to use a digital double, with cape and all.

Digital Replicas

Where does this scanning trend come from?

- Need for Realism: As audiences demand more immersive and lifelike visuals, filmmakers have turned to these technologies to create highly detailed and accurate digital assets. Whether it’s a digital double of an actor or a virtual environment, these techniques allow for unprecedented realism, capturing every detail from skin texture to environmental nuances.

- Efficiency and Speed: Traditional methods of creating 3D models by hand are time-consuming. Scanning technologies, on the other hand, can quickly capture real-world objects or locations in high detail, significantly speeding up the process of creating complex digital assets.

- Cost-Effectiveness: Although the initial investment in scanning technology can be high, it can save money in the long run. By reducing the need for expensive set construction and the risk of performing dangerous stunts, scanning can lower production costs.

- Improved Integration with Live Action: Scanned assets, especially with technologies like LiDAR, allow for more accurate matching of digital elements with real-world footage. This makes it easier to blend CGI seamlessly with live-action shots, resulting in more convincing VFX.

- Advances in Technology: The availability of better hardware (like high-resolution cameras, LiDAR scanners, and processing power) and more advanced software has made scanning more accessible and precise. This has made it a go-to method for creating complex digital assets that look real and fit seamlessly into films.

- Flexibility in Virtual Production: In modern filmmaking, especially with virtual production techniques, having highly accurate scans of real-world elements allows for greater flexibility. Filmmakers can digitally manipulate scanned environments or characters without having to worry about the physical limitations of sets or actors.

The Digital Michelangelo Project

Simulation

VR

Game Worlds

Google Street View, Google Maps

The image precedes reality

Simulacra & Simulation

Simulacra and Simulation by Jean Baudrillard is a key work in postmodern philosophy, where Baudrillard examines how modern society has shifted from experiencing reality to living in a world of simulations—copies that no longer refer to anything real. His central argument is that, in the age of mass media and technology, we are increasingly surrounded by “simulacra,” which are representations or images that have no original referent or reality behind them.

Baudrillard outlines four stages of simulacra:

- The image is a faithful copy of a real thing.

- The image distorts reality, masking a basic truth.

- The image pretends to be a reality, but hides the absence of any original.

- The image has no connection to any reality, and becomes its own truth (hyperreality).

In hyperreality, simulations—like media, advertisements, and virtual worlds—are experienced as more real than reality itself, to the point where people can no longer distinguish between the two. Baudrillard argues that this shift leads to a collapse of meaning and truth, as we live in a world dominated by images that create their own version of reality.

The book critiques how media and consumer culture shape our perceptions, replacing authentic experience with manufactured representations. Baudrillard’s work challenges the idea that media simply reflects reality; instead, it creates a new reality that people accept as the truth. Ultimately, Simulacra and Simulation explores how this detachment from the real undermines our ability to critically engage with the world.

The map comes before the world

In Simulacra and Simulation, Jean Baudrillard introduces the idea that “the map precedes the territory” to illustrate how, in contemporary society, representations no longer merely reflect or mirror reality; they precede and construct it. This concept challenges the traditional idea that a map (a representation) is a tool used to depict an existing, real world (the territory). Instead, Baudrillard argues that in the postmodern era, representations such as media images, advertisements and digital simulations no longer just reflect the real world. They actively shape and define how we perceive and experience reality itself.